Cross-Modal Fine-Tuning

by Junhong Shen from Carnegie Mellon University

Topic: Cross-Modal Fine-Tuning: Align then Refine

Abstract:

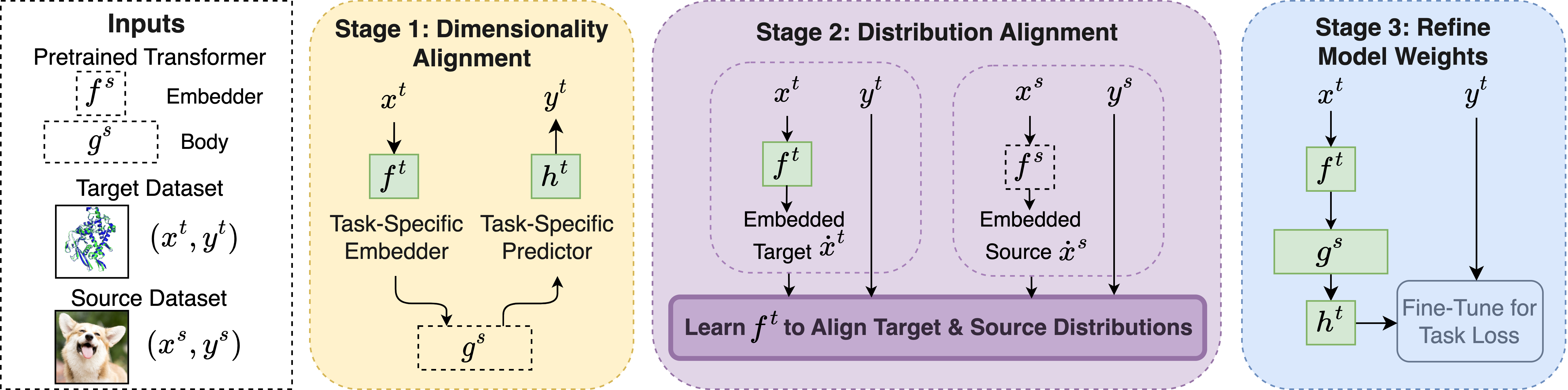

Fine-tuning large-scale pretrained models has led to tremendous progress in well-studied modalities such as Vision and NLP. However, similar gains have not been observed in many other modalities due to a lack of relevant pretrained models. In this work, we propose ORCA, a general cross-modal fine-tuning framework that extends the applicability of a single large-scale pretrained model to diverse modalities. ORCA adapts to a target task via an align-then-refine workflow: given the target input, ORCA first learns an embedding network that aligns the embedded feature distribution with the pretraining modality. The pretrained model is then fine-tuned on the embedded data to exploit the knowledge shared across modalities. Through extensive experiments, we show that ORCA obtains state-of-the-art results on 3 benchmarks containing over 60 datasets from 12 modalities, outperforming a wide range of hand-designed, AutoML, general-purpose, and task-specific methods. We highlight the importance of data alignment via a series of ablation studies and demonstrate ORCA’s utility in data-limited regimes.
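The align-then-refine workflow described in the abstract can be illustrated with a toy, pure-Python sketch. Everything in it is hypothetical:

- 1-D "source" features stand in for the pretraining modality.
- A linear map `z = a * x + b` stands in for the embedding network.
- A linear head `y = w * z + c` stands in for the pretrained model.

The abstract does not name the distribution distance used for alignment. Stage 1 therefore minimizes a simple moment-matching gap (squared differences in mean and standard deviation) as a stand-in. Stage 2 then fine-tunes the head on the embedded target data with the embedder frozen. That is a simplification chosen for this sketch, not a claim about how ORCA actually trains. Gradients are taken by finite differences so the example needs no dependencies.

```python
import math
import random


def mean_std(xs):
    """Mean and population standard deviation of a list of floats."""
    m = sum(xs) / len(xs)
    return m, math.sqrt(sum((x - m) ** 2 for x in xs) / len(xs))


def numeric_grad(loss, params, eps=1e-5):
    """Central finite-difference gradient of loss(params) w.r.t. each entry."""
    grads = []
    for i in range(len(params)):
        old = params[i]
        params[i] = old + eps
        up = loss(params)
        params[i] = old - eps
        down = loss(params)
        params[i] = old  # restore the original value
        grads.append((up - down) / (2 * eps))
    return grads


def descend(loss, params, lr, steps):
    """Plain gradient descent; updates params in place and returns them."""
    for _ in range(steps):
        for i, g in enumerate(numeric_grad(loss, params)):
            params[i] -= lr * g
    return params


rng = random.Random(0)
# Hypothetical 1-D data: "source" features the pretrained model was trained on,
# and raw "target" inputs from another modality with a different scale/offset.
src = [rng.gauss(0.0, 1.0) for _ in range(200)]
tgt_x = [rng.gauss(3.0, 2.0) for _ in range(200)]
tgt_y = [1.5 * (x - 3.0) + 0.5 for x in tgt_x]  # hypothetical target labels
src_mean, src_std = mean_std(src)


def align_loss(emb):
    """Stage 1 (align): moment-matching gap between embedded target and source.
    A stand-in distance for illustration, not necessarily ORCA's metric."""
    a, b = emb
    m, s = mean_std([a * x + b for x in tgt_x])
    return (m - src_mean) ** 2 + (s - src_std) ** 2


emb = [1.0, 0.0]  # hypothetical embedder z = a * x + b, starts as the identity
gap_before = align_loss(emb)
descend(align_loss, emb, lr=0.03, steps=800)
gap_after = align_loss(emb)

# Stage 2 (refine): fine-tune a "pretrained" linear head on the embedded data.
z = [emb[0] * x + emb[1] for x in tgt_x]  # embedder kept frozen in this sketch


def head_loss(head):
    """Mean squared error of the head y = w * z + c on the target labels."""
    w, c = head
    return sum((w * zi + c - yi) ** 2 for zi, yi in zip(z, tgt_y)) / len(z)


head = [2.0, 1.0]  # hypothetical weights carried over from source pretraining
mse_before = head_loss(head)
descend(head_loss, head, lr=0.1, steps=200)
mse_after = head_loss(head)

print(f"alignment gap: {gap_before:.3f} -> {gap_after:.2e}")
print(f"target MSE:    {mse_before:.3f} -> {mse_after:.2e}")
```

Running the script prints two before/after pairs. The first shows the alignment gap shrinking toward zero in stage 1. The second shows the target error of the carried-over head dropping in stage 2. A real setting would use a deep embedder and model trained with automatic differentiation; the finite-difference loop here only keeps the example self-contained.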

| Topic | Cross-Modal Fine-Tuning: Align then Refine |
| --- | --- |
| Slides | https://docs.google.com/presentation/d/1PVB8Rbikrv80qMUtS4Obbj-58rGjLfDFmsNmGLuPrQU |
| When | 20.03.2023, 15:00 - 16:00 (CET) / 10:00 - 11:00 (EDT) |
| Where | https://us02web.zoom.us/j/85216309906?pwd=cVB0SjNDR2tYOGhIT0xqaGZ2TzlKUT09 |
| Video Recording | TBA |

Speaker:

Junhong Shen is a second-year Ph.D. student in the Machine Learning Department at CMU, advised by Ameet Talwalkar. Her current research focuses on automated machine learning (AutoML), in particular on building practical, easily accessible model development tools for diverse real-world applications.

She obtained her B.S. in Mathematics of Computation at UCLA, where she was fortunate to work with Lin Yang on sample-efficient reinforcement learning. She also studied multi-agent RL and Theory of Mind, advised by Song-Chun Zhu and Ying Nian Wu.