Compositional Generative Inverse Design

by Dr. Tailin Wu (Westlake University)

Abstract:

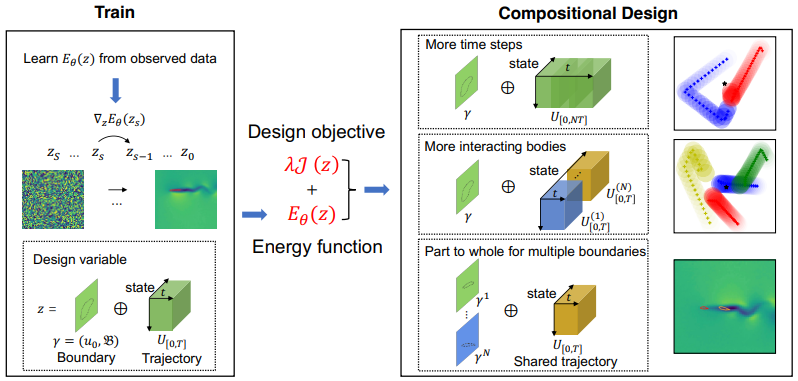

Inverse design, where we seek to design input variables in order to optimize an underlying objective function, is an important problem that arises across fields ranging from mechanical engineering to aerospace engineering. Inverse design is typically formulated as an optimization problem, with recent works optimizing through learned dynamics models. However, as these models are optimized against, they tend to fall into adversarial modes, preventing effective sampling. We illustrate that by instead optimizing over the learned energy function captured by a diffusion model, we can avoid such adversarial examples and significantly improve design performance. We further illustrate that such a design system is compositional: multiple diffusion models, each representing a subcomponent of the desired system, can be combined to design systems that contain every specified component. In an N-body interaction task and a challenging 2D multi-airfoil design task, we demonstrate that by composing the learned diffusion models at test time, our method can design initial states and boundary shapes that are more complex than those in the training data. Our method outperforms a state-of-the-art neural inverse design method by an average of 41.5% in prediction MAE and 14.3% in design objective on the N-body dataset, and it discovers formation flying as a way to minimize drag in the multi-airfoil design task.
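The idea of composing energy functions and optimizing a design against their sum can be illustrated with a toy sketch. This is not the speaker's implementation: the quadratic energies, the names `MU_A`, `MU_B`, `TARGET`, `grad_energy`, and `design`, and the weight `lam` are all hypothetical, and plain gradient descent stands in for the diffusion model's denoising process.

```python
import numpy as np

# Hypothetical stand-ins for learned models: each "energy" is a quadratic
# bowl centred on a preferred design, E_i(z) = 0.5 * ||z - mu_i||^2.
MU_A = np.array([1.0, 0.0])
MU_B = np.array([0.0, 1.0])
TARGET = np.array([2.0, 2.0])  # toy design objective pulls z toward this point


def grad_energy(mu):
    """Return the gradient function of a toy quadratic energy centred at mu."""
    return lambda z: z - mu


def grad_objective(z):
    """Gradient of the toy design objective J(z) = 0.5 * ||z - TARGET||^2."""
    return z - TARGET


def design(z0, energy_grads, lam=0.5, step=0.1, n_steps=200):
    """Plain gradient descent on sum_i E_i(z) + lam * J(z) (an assumed form)."""
    z = np.asarray(z0, dtype=float).copy()
    for _ in range(n_steps):
        # Composition: gradients of the individual energies simply add up.
        total_grad = sum(g(z) for g in energy_grads) + lam * grad_objective(z)
        z = z - step * total_grad
    return z


z_star = design(np.zeros(2), [grad_energy(MU_A), grad_energy(MU_B)])
print(z_star)
```

For these toy quadratics the minimizer has the closed form `(MU_A + MU_B + lam * TARGET) / (2 + lam)`, so the result is a compromise between what each component prefers and what the objective rewards.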

| Field | Details |
| --- | --- |
| Topic | Compositional Generative Inverse Design |
| Slides | https://drive.google.com/file/d/1nLPPtCoSFQJn-ZqofDjPwCiWwATsKPIY |
| When | 19.02.24, 15:00 - 16:15 (CET) / 09:00 - 10:15 (ET) / 07:00 - 08:15 (MT) / 22:00 (BJT) |
| Where | https://us02web.zoom.us/j/85216309906?pwd=cVB0SjNDR2tYOGhIT0xqaGZ2TzlKUT09 |
| Video Recording | TBA |

Speaker(s):

Dr. Tailin Wu (http://tailin.org) is an assistant professor in the Department of Engineering at Westlake University. He received his Ph.D. from MIT, where his thesis focused on the intersection of AI, physics, and information theory, and he completed his postdoc in Computer Science at Stanford, working with Professor Jure Leskovec. His research interests include developing machine learning methods for large-scale scientific simulation and design, and neuro-symbolic methods for scientific discovery, using tools from graph neural networks, generative models, and physics. His work has been published in top machine learning conferences and leading physics journals, and has been featured in MIT Technology Review. It has been applied to problems in physics, materials, energy, and scientific discovery. He also serves as a reviewer for high-impact journals such as PNAS, Nature Communications, Nature Machine Intelligence, and Science Advances.